Stabilize IT & AI

The First Phase of Innovating Beyond Efficiency®

Building a Reliable Foundation for Innovation

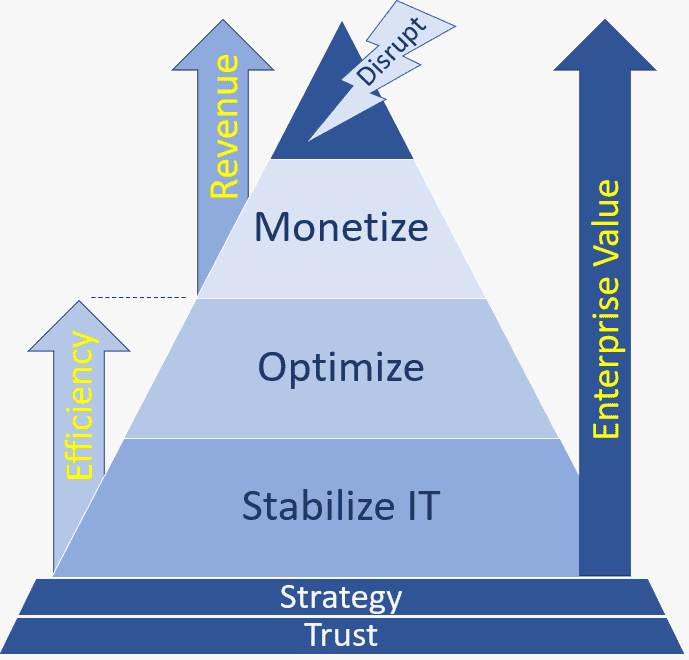

At Innovation Vista, we view technology strategy through the lens of our award-winning framework: Innovating Beyond Efficiency®. This framework is more than a methodology; it’s our mindset about innovation and the role of IT & AI in business success. It is the approach we take in our foundational CIO IQ® advisory service and the guiding principle across our Contract CIO+® leadership offerings, including Interim CIO, Virtual CIO, cybersecurity, AI, analytics, and digital transformation.

Within this maturity model, every organization’s journey begins with Stabilize IT. Before efficiency, revenue, or disruption can be pursued, IT must be a platform the business can count on every day. Only when infrastructure, systems, and data are reliable and secure is it wise to invest in optimization, monetization, or AI-driven innovation.

Stabilization as the First Phase

Stability is the foundation. Without it, every other initiative is at risk. Organizations struggling with recurring system crashes, data corruption, siloed or inconsistent data, or security gaps cannot hope to innovate with confidence.

This phase focuses on:

- Ensuring cybersecurity protections and monitoring are in place
- Assessing network and telecom architecture
- Evaluating data center and cloud infrastructure resilience
- Reviewing enterprise systems for completeness and reliability
- Validating data quality, reporting accuracy, and integration
- Aligning IT staff, vendors, and support processes to business needs

At this stage, IT evolves from a source of risk and disruption to a dependable foundation for the enterprise – one that earns the trust of both leadership and staff.

The Power of Reliable IT

Confidence in IT Unlocks Real Innovation

Every great innovation is built on stability. Our consultants have decades of experience stabilizing IT platforms across industries, transforming fragile environments into resilient ones. With IT stability, leaders can focus on strategy and innovation without fear that systems will collapse under pressure.

By aligning stabilization efforts with organizational goals, we ensure that technology not only avoids failure but actively supports growth. Stability is not glamorous, but it is the prerequisite that makes everything else possible.

A Track Record of Restoring Confidence

Innovation Vista’s consultants have guided hundreds of organizations through tech stabilization, helping clients eliminate recurring outages, modernize legacy infrastructure, remediate security vulnerabilities, and rebuild trust in IT. These improvements raise the impact of technology within client organizations, and they have also unlocked billions in downstream ROI by enabling those organizations to innovate, optimize, and monetize their IT & AI capabilities with confidence.

This is the promise of Innovating Beyond Efficiency®: a journey that begins with stabilization, strengthens through optimization, and culminates in monetization.

Tech the Entire Organization Can Count On

Ready to Stabilize Your IT?

If your IT team or vendors have struggled to deliver a reliable platform, it may be time to bring in an experienced C-level advisor. Whether through CIO IQ® or Contract CIO+®, our mission is the same: Innovating Beyond Efficiency® – guiding you to stabilize IT as the foundation for efficiency, innovation, and tech- & AI-powered growth.

Contact us today to bring stability to your IT – the essential first step in preparing your organization for tech- and AI-powered growth.
